Current Status:

Project XY deploys its smart contracts (written in LIGO, SmartPy or Archetype). Everything works well. Users can see the compiled Michelson on Better Call Dev and can even fork it directly via the website.

What is not possible on Tezos is what Etherscan offers on Ethereum: verifying a contract's source code (against its ABI and bytecode) so that, once verified, the Solidity source is displayed for everyone.

Quote from Alexander Eichhorn: @Alex

This level of verification does not (yet) exist in Tezos. Although the Michelson bytecode can have type annotations there is still a huge gap between actual source (smartpy, etc) and Michelson. I’m not aware of any Tezos compiler that adds version info into the bytecode (there is a more or less well used field in the tzip 16 metadata, but few contracts actually use tzip16 or add the compiler version there). As explorer we’d be interested to link original source to a published contract, but there’s no easy way for us to locate/identify the original source or automate the verification process.

Only thing you have for free in Tezos is the standardized ABI interface and type info for the contract’s storage. Both are explicitly published as Micheline structures together with the code of the contract (see parameters and storage sections in contract scripts)
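To illustrate the distinction in the quote between what an explorer can already read and what it cannot, here is a minimal Python sketch. It assumes the contract script (Micheline JSON, in the shape a node returns from `/chains/main/blocks/head/context/contracts/<address>/script`) and the contract's TZIP-16 metadata JSON have already been fetched. `interface_of` and `entrypoints` recover the "ABI" and storage type; `declared_tools` reads the optional TZIP-16 `source.tools` field. All function names and the example contract are hypothetical. Nothing here links the Michelson back to a specific LIGO, SmartPy or Archetype source file, which is the gap being described.

```python
import json
from typing import Any, Dict, List, Optional

Micheline = Dict[str, Any]


def interface_of(script: Dict[str, Any]) -> Dict[str, Micheline]:
    """Return the parameter and storage types from a script in Micheline JSON
    (the shape a node returns for .../context/contracts/<address>/script)."""
    sections = {node["prim"]: node["args"][0] for node in script["code"]}
    return {"parameter": sections["parameter"], "storage": sections["storage"]}


def entrypoints(t: Micheline) -> List[str]:
    """Collect %annotations in the parameter's `or` tree (simplified:
    ignores the implicit 'default' entrypoint of unannotated parameters)."""
    names = [a[1:] for a in t.get("annots", []) if a.startswith("%")]
    if t.get("prim") == "or":
        for branch in t["args"]:
            names += entrypoints(branch)
    return names


def declared_tools(metadata_json: str) -> Optional[List[str]]:
    """Read the optional TZIP-16 source.tools list, if the contract has one."""
    source = json.loads(metadata_json).get("source")
    if not isinstance(source, dict):
        return None  # the common case: no compiler info published
    return list(source.get("tools", []))


# Hand-written example, not taken from a real contract; code body elided.
script = {
    "code": [
        {"prim": "parameter", "args": [{"prim": "or", "args": [
            {"prim": "int", "annots": ["%decrement"]},
            {"prim": "int", "annots": ["%increment"]}]}]},
        {"prim": "storage", "args": [{"prim": "int"}]},
        {"prim": "code", "args": [[]]},
    ],
    "storage": {"int": "0"},
}
iface = interface_of(script)
print(entrypoints(iface["parameter"]))  # ['decrement', 'increment']
print(iface["storage"])                 # {'prim': 'int'}
print(declared_tools('{"source": {"tools": ["SmartPy"]}}'))  # ['SmartPy']
print(declared_tools('{"name": "no source field"}'))         # None
```

Even when `source.tools` is present, it only names the tool that was used; it does not let anyone reproduce or check the deployed Michelson.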

What users can't do (especially users who don't code or aren't Michelson-savvy) is verify the contract source before interacting with it. Why is this so important?

Well because…

- the advertised contract code may be different from what runs on the blockchain.

- smart contract verification enables investigating what a contract does through the higher-level language it is written in, without having to read Michelson, which is a stack-based language.

- it provides additional transparency.

- it ultimately supports the “trustless” ethos: smart contracts are designed to be “trustless”, so a user shouldn't have to trust but should be able to verify the smart contract source code.

- verified smart contracts are easier for others to reuse or modify (because more than just the Michelson is available), which benefits the whole ecosystem.

Why isn't Michelson sufficient for this?

Michelson forms the lowest level of a Tezos smart contract: the Michelson code is what gets deployed on the Tezos network. However, while reading or writing Michelson is manageable for small smart contracts, it quickly becomes tedious and complicated for more complex ones.

Most people know this feature from the Ethereum blockchain. Here is an excerpt from the Ethereum documentation describing how the process works there:

Deploying a smart contract on Ethereum requires sending a transaction with a data payload (compiled bytecode) to a special address. The data payload is generated by compiling the source code, plus the constructor arguments of the contract instance appended to the data payload in the transaction. Compilation is deterministic, meaning it always produces the same output (i.e., contract bytecode) if the same source files, and compilation settings (e.g. compiler version, optimizer) are used.

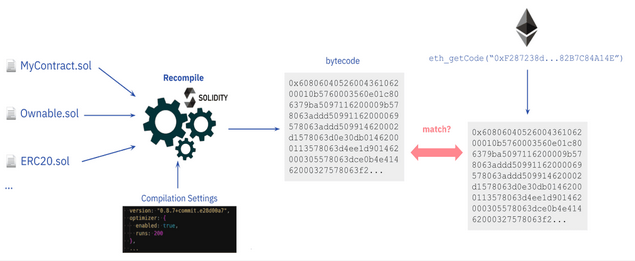

Verifying a smart contract basically involves the following steps:

1. Input the source files and compilation settings to a compiler.

2. Compiler outputs the bytecode of the contract

3. Get the bytecode of the deployed contract at a given address

4. Compare the deployed bytecode with the recompiled bytecode. If the codes match, the contract gets verified with the given source code and compilation settings.

5. Additionally, if the metadata hashes at the end of the bytecode match, it will be a full match.
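Steps 4 and 5 can be sketched in Python as a comparison that distinguishes a full match from a partial one. This assumes the usual solc layout, where the last two bytes of the bytecode give the big-endian length of the CBOR-encoded metadata appended just before them. The function names are hypothetical, and the sketch ignores constructor arguments and immutable values, which real verifiers such as Sourcify have to handle.

```python
from typing import Optional


def _to_bytes(hex_str: str) -> bytes:
    """Parse a hex string, with or without a leading 0x."""
    if hex_str.startswith("0x"):
        hex_str = hex_str[2:]
    return bytes.fromhex(hex_str)


def strip_metadata(code: bytes) -> bytes:
    """Remove the trailing CBOR metadata section appended by solc.

    Assumes the last two bytes encode (big-endian) the length of the CBOR
    blob that precedes them; if that length doesn't fit, return code as-is.
    """
    if len(code) < 2:
        return code
    meta_len = int.from_bytes(code[-2:], "big")
    if meta_len + 2 > len(code):
        return code
    return code[: len(code) - meta_len - 2]


def match_level(deployed_hex: str, recompiled_hex: str) -> Optional[str]:
    """Compare on-chain bytecode (step 3) with recompiled bytecode (step 2)."""
    deployed, recompiled = _to_bytes(deployed_hex), _to_bytes(recompiled_hex)
    if deployed == recompiled:
        return "full"      # step 5: even the metadata hash matches
    if strip_metadata(deployed) == strip_metadata(recompiled):
        return "partial"   # step 4: executable code matches, metadata differs
    return None            # source and settings do not reproduce the contract


# Dummy bytecode: 5 bytes of "code" plus a fake 3-byte metadata blob + length.
body = "6001600155"
print(match_level("0x" + body + "a1b2c30003", body + "a1b2c30003"))  # full
print(match_level(body + "a1b2c30003", body + "d4e5f60003"))         # partial
print(match_level("6002600155a1b2c30003", body + "a1b2c30003"))      # None
```

The partial/full split matters because the metadata hash changes with details such as file comments or paths, while the executable code stays the same.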

So this basically looks like this

I am (unfortunately) not competent enough to judge whether this is a good approach, but I suppose the core developers would know whether it is a feasible way to enable this on Tezos too, or whether another approach is needed. It would be great if the core developers, or someone else such as @G_B_Fefe, would take this on.

Once this is possible on Tezos, block explorers like TzStats or Better Call Dev can add a source verification option so users can easily verify a contract. Other tools may also emerge from this, as they have on Ethereum (e.g. Sourcify or Tenderly).