Tarides made further progress on the Tezos storage projects in December 2021, and we’re proud to report our achievements as we continue to develop fast, reliable storage components for Tezos.

Tarides strives to improve the performance and scalability of Tezos storage as the chain’s activity grows. Our efforts in December focused mainly on reducing the number of I/O operations performed by Octez nodes (via a feature we call structured keys) and on extending the protocol to provide Merkle proofs for proxies, light clients, and Layer-2 Rollups.

We extend special thanks to the engineers and technical writers who contributed to this report, which wouldn’t have been possible without all their hard work. If you are new to Irmin, you can read more on our website, Irmin.org.

Improve the Performance

Improve irmin-pack I/O Performance

To reduce the number of I/O operations, we continued the structured keys implementation in irmin-pack. First, to understand the effects of this optimisation, we updated both the record/replay benchmarks and the irmin-pack stats, and we ran benchmarks on 100k and 200k commits with different indexing strategies. The results suggest a performance improvement with the minimal indexing strategy, which adds only commits to the index and duplicates all nodes and contents under the commits. Despite our initial concerns, this duplication doesn’t lead to a notable increase in disk space, and it significantly improves the I/O stats and hence the overall performance of the node.

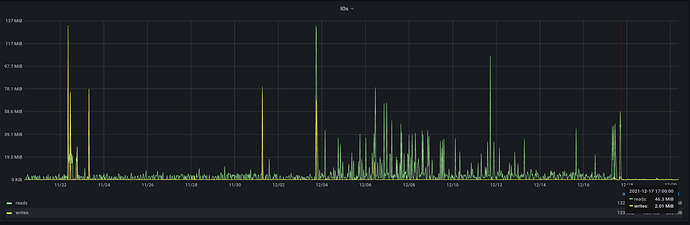
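The trade-off between indexing strategies can be illustrated with a toy Python model of an append-only pack file. It assumes, as a simplification, that a structured key carries the object’s offset in the pack file, so a read through a key needs no index lookup. `PackStore`, `Key`, `simulate`, and the strategy names `"always"` and `"minimal"` are hypothetical and do not reflect irmin-pack’s actual API.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Key:
    """Hypothetical structured key: the object's hash plus its pack-file offset."""
    digest: str
    offset: int


@dataclass
class PackStore:
    strategy: str  # "always": index every object; "minimal": index commits only
    pack: List[Tuple[str, bytes]] = field(default_factory=list)  # append-only log
    index: Dict[str, int] = field(default_factory=dict)  # digest -> offset
    index_lookups: int = 0

    def add(self, kind: str, data: bytes) -> Key:
        digest = hashlib.sha256(data).hexdigest()
        if self.strategy == "always":
            # Deduplicate through the index: one index lookup per write.
            self.index_lookups += 1
            if digest in self.index:
                return Key(digest, self.index[digest])
        offset = len(self.pack)
        self.pack.append((kind, data))  # without dedup, identical data is stored again
        if self.strategy == "always" or kind == "commit":
            self.index[digest] = offset
        return Key(digest, offset)

    def find(self, key: Key) -> bytes:
        # The key already holds the offset, so reading needs no index lookup.
        return self.pack[key.offset][1]


def simulate(strategy: str) -> PackStore:
    store = PackStore(strategy)
    for i in range(3):
        store.add("contents", b"shared value")  # same contents under every commit
        store.add("commit", b"commit %d" % i)
    return store


for s in (simulate("always"), simulate("minimal")):
    print(s.strategy, len(s.pack), len(s.index), s.index_lookups)
# always 4 4 6
# minimal 6 3 0
```

In this toy, the minimal strategy performs no index lookups on writes, at the cost of storing the shared contents three times instead of once.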

We also successfully bootstrapped a node with a Tezos branch that includes this new feature. This new mode is very promising! Below are the I/O stats (rolling node, running with the minimal strategy using an imported snapshot), with the transition to the new patch, added a few days ago, shown in red:

The structured keys feature required some changes that were incompatible with the layered store’s existing implementation, so we temporarily disabled the layered store. This doesn’t impact Tezos because the layered store isn’t used in production yet. We’ve now started working on a new implementation of it.

Relevant links: mirage/irmin#1659, mirage/irmin#1664, mirage/irmin#1644, mirage/irmin#1647.

Improve Snapshot Export/Import

To reduce the memory consumption of a snapshot import, we proposed a patch that uses sequences instead of loading large lists in memory. This is a temporary measure until we develop a new snapshot format that imports large directories in smaller chunks, which should reduce both the memory usage and the duration of the import.
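The difference between the two approaches can be sketched with Python generators standing in for OCaml’s lazy `Seq.t`. The length-prefixed entry format and all function names below are hypothetical; the point is that the streaming import keeps only the current entry alive, while the eager one materialises the whole list first.

```python
import io
from typing import BinaryIO, Callable, Iterator, List, Tuple

Entry = Tuple[str, bytes]  # hypothetical (path, contents) snapshot entry


def encode(entries: List[Entry]) -> bytes:
    """Serialise entries as length-prefixed records (hypothetical format)."""
    out = bytearray()
    for path, data in entries:
        p = path.encode()
        out += len(p).to_bytes(4, "big") + len(data).to_bytes(4, "big") + p + data
    return bytes(out)


def read_entries(stream: BinaryIO) -> Iterator[Entry]:
    """Lazily decode one entry at a time, like an OCaml Seq.t."""
    while True:
        header = stream.read(8)
        if not header:
            return
        path_len = int.from_bytes(header[:4], "big")
        data_len = int.from_bytes(header[4:], "big")
        path = stream.read(path_len).decode()
        yield path, stream.read(data_len)


def import_eager(stream: BinaryIO, write: Callable[[str, bytes], None]) -> int:
    entries = list(read_entries(stream))  # the whole list is materialised first
    for path, data in entries:
        write(path, data)
    return len(entries)


def import_streaming(stream: BinaryIO, write: Callable[[str, bytes], None]) -> int:
    count = 0
    for path, data in read_entries(stream):  # only the current entry is live
        write(path, data)
        count += 1
    return count


snapshot = encode([("a/b", b"1"), ("a/c", b"22")])
store = {}
print(import_streaming(io.BytesIO(snapshot), store.__setitem__))  # 2
print(store)  # {'a/b': b'1', 'a/c': b'22'}
```

Both imports write the same entries; only the peak memory differs, since the eager version holds every decoded entry at once.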

Relevant links: tezos/tezos#2217, tezos/tezos#4076.

Record/Replay of the Tezos Bootstrap Trace

The code to record and replay a trace was moved from Irmin to the Tezos codebase. We are fixing the last details on the working branch, and we regularly rebase it onto Tezos#master and test it there.
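A record/replay benchmark captures the storage operations a node performs while bootstrapping, then re-executes them against a store in isolation. A minimal sketch of the idea follows; the dict-backed store, the `(operation, key, payload)` trace format, and the names `RecordingStore` and `replay` are hypothetical and unrelated to the actual trace format in the Tezos branch.

```python
import time
from typing import Any, Dict, List, Optional, Tuple

Op = Tuple[str, str, Any]  # hypothetical trace entry: (operation, key, value or result)


class RecordingStore:
    """Wraps a dict-backed store and appends every operation to a trace."""

    def __init__(self) -> None:
        self.data: Dict[str, bytes] = {}
        self.trace: List[Op] = []

    def set(self, key: str, value: bytes) -> None:
        self.data[key] = value
        self.trace.append(("set", key, value))

    def get(self, key: str) -> Optional[bytes]:
        result = self.data.get(key)
        self.trace.append(("get", key, result))  # recorded so replay can check it
        return result


def replay(trace: List[Op]) -> float:
    """Re-execute a trace against a fresh store and return the elapsed seconds."""
    store: Dict[str, bytes] = {}
    start = time.perf_counter()
    for op, key, payload in trace:
        if op == "set":
            store[key] = payload
        elif op == "get":
            if store.get(key) != payload:
                raise RuntimeError(f"replay diverged on get {key!r}")
        else:
            raise ValueError(f"unknown operation {op!r}")
    return time.perf_counter() - start


rec = RecordingStore()
rec.set("head", b"block-1")
rec.get("head")
rec.get("missing")
print(len(rec.trace), replay(rec.trace) >= 0)  # 3 True
```

Recording results alongside operations lets the replay detect divergence as well as measure time.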

Continuous Benchmarking Infrastructure

We’ve been working on Equinoxe, a CLI tool for deploying machines on Equinix (formerly Packet) from the command line, and we released its first version, equinoxe.0.1.0. The library exposes OCaml bindings to the Equinix API, which the OCurrent pipeline can use to deploy machines for benchmarks and CI jobs.

We now also monitor our Tezos node running on AWS, with metrics collected by Prometheus and displayed in Grafana.

We also made some improvements to the frontend that displays benchmarking results for each PR: a better metrics display, the PR title shown as a GitHub link, and min/max/avg values in the tooltip for multi-value data points.

Finally, we started working on a project that uses OCluster to schedule benchmarking work on different machines. We exposed the internal library ocluster_worker to experiment with a different kind of worker that builds images and then runs them with custom settings, e.g., the OCaml version or the number of CPUs to use. Controlling these settings should make benchmarks reproducible and stable.

Relevant links: current-bench#229, current-bench#219, current-bench#224, equinoxe.0.1.0, ocluster#151, current-bench#257.

Merkle Proofs

Optimised Merkle Proofs

Merkle proofs are a lightweight way to share partial chain state between parties. They can be used to implement efficient proxies, light clients (i.e., clients without storage components), or more complex Layer-2 Rollups.

Irmin 2.9.0 introduced an initial implementation of Merkle proofs as Merkle trees in which some subtrees are replaced by their hash. In December 2021, we improved on this proposal by adding a second API for streamed Merkle proofs. Both approaches start from the root node hash, apply a sequence of operations to the tree, and return the root hash of the resulting tree. Streamed proofs provide stronger guarantees about the order of operations and the minimality of the proof. This API is part of Irmin 2.10.
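To illustrate the two shapes of proof, here is a toy Python model over a tree of nested dicts with byte-string contents. It is not Irmin’s proof API or encoding: the hash scheme and the names `tree_proof`, `stream_proof`, and their verifiers are hypothetical, and the sketch only covers reading one path rather than arbitrary operations. A tree proof keeps the nodes along the path and replaces every other subtree by its hash; a stream proof sends the visited nodes in order, and the verifier checks each one against the hash it expects next.

```python
import hashlib
from dataclasses import dataclass
from typing import Dict, List, Tuple, Union


@dataclass(frozen=True)
class Blinded:
    """A subtree replaced by its hash inside a tree proof."""
    digest: str


Tree = Union[bytes, Blinded, Dict[str, "Tree"]]  # bytes = contents, dict = node


def h(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def node_bytes(children: Dict[str, str]) -> bytes:
    # Serialise an inner node as its sorted (name, child hash) entries.
    return "".join(f"{k}={v};" for k, v in sorted(children.items())).encode()


def hash_tree(t: Tree) -> str:
    if isinstance(t, Blinded):
        return t.digest
    if isinstance(t, bytes):
        return h(b"leaf:" + t)
    return h(b"node:" + node_bytes({k: hash_tree(c) for k, c in t.items()}))


def tree_proof(t: Tree, path: List[str]) -> Tree:
    """Keep the nodes along `path` and blind every other subtree."""
    if not path or not isinstance(t, dict):
        return t
    return {k: tree_proof(c, path[1:]) if k == path[0] else Blinded(hash_tree(c))
            for k, c in t.items()}


def verify_tree_proof(proof: Tree, root: str, path: List[str]) -> bytes:
    if hash_tree(proof) != root:
        raise ValueError("root hash mismatch")
    for step in path:
        proof = proof[step]
    if not isinstance(proof, bytes):
        raise ValueError("path does not lead to contents")
    return proof


def stream_proof(t: Tree, path: List[str]) -> List[Tuple[str, object]]:
    """Emit the visited nodes in visiting order: child hashes, then the contents."""
    out: List[Tuple[str, object]] = []
    for step in path:
        out.append(("node", {k: hash_tree(c) for k, c in t.items()}))
        t = t[step]
    out.append(("leaf", t))
    return out


def verify_stream(stream: List[Tuple[str, object]], root: str, path: List[str]) -> bytes:
    items = iter(stream)

    def pull(kind: str, expected: str):
        elt = next(items, None)
        if elt is None or elt[0] != kind:
            raise ValueError("missing or unexpected element")
        payload = elt[1]
        data = b"node:" + node_bytes(payload) if kind == "node" else b"leaf:" + payload
        if h(data) != expected:
            raise ValueError("hash mismatch")
        return payload

    expected = root
    for step in path:
        expected = pull("node", expected)[step]  # hash of the next node to visit
    value = pull("leaf", expected)
    if next(items, None) is not None:
        raise ValueError("stream contains unused elements")
    return value


state = {"contracts": {"alice": b"100", "bob": b"42"}, "votes": {"p1": b"yes"}}
root, path = hash_tree(state), ["contracts", "bob"]
print(verify_tree_proof(tree_proof(state, path), root, path))  # b'42'
print(verify_stream(stream_proof(state, path), root, path))  # b'42'
```

Tampering with any element, or appending an extra one, makes `verify_stream` raise, which loosely mirrors the ordering and minimality guarantees described above.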

Relevant links: mirage/irmin#1670, mirage/irmin#1673, mirage/irmin#1691.

Improve Tezos Interoperability

Rust Bindings for Irmin

We are preparing a release of the Rust bindings to coincide with the release of Irmin 3.0. In addition to the Rust bindings, the libirmin library provides Python bindings to Irmin. As examples of these bindings, we list all paths that hold contents in the Tezos context, from both Python and Rust.
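The traversal those examples perform can be sketched in plain Python over a nested-dict stand-in for an Irmin tree. This sketch does not use libirmin, whose actual API is shown in the linked `tezos.py` and `tezos.rs` examples; `content_paths` and the sample context are made up for illustration.

```python
from typing import Dict, Iterator, List, Optional, Union

Tree = Union[bytes, Dict[str, "Tree"]]  # bytes = contents, dict = node


def content_paths(tree: Tree, prefix: Optional[List[str]] = None) -> Iterator[str]:
    """Yield the '/'-joined path of every contents leaf, depth-first, sorted by name."""
    prefix = prefix or []
    if isinstance(tree, bytes):
        yield "/".join(prefix)
        return
    for name in sorted(tree):
        yield from content_paths(tree[name], prefix + [name])


context = {"version": b"genesis", "contracts": {"index": {"tz1a": {"balance": b"100"}}}}
for path in content_paths(context):
    print(path)
# contracts/index/tz1a/balance
# version
```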

Relevant links: zshipko/libirmin/examples/tezos.py, zshipko/libirmin/examples/tezos.rs

Maintain Tezos’ MirageOS Dependencies

General Irmin Maintenance

We worked on improving the Irmin CLI ahead of the next Irmin release. To better support the Tezos stores, we’re adding Irmin branches to Tezos, so that whenever the Tezos node updates its head, the update propagates to Irmin’s branch store.

The structured keys feature isn’t yet well supported by the irmin-graphql and irmin-http APIs. We are working on adding support for it, but this won’t block the release of Irmin 3.0.

We’re also experimenting with a compact hashtable layout for elements with an immediate runtime representation (such as OCaml integers), which could be used for the in-memory log of the index.
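The idea behind a compact layout is that immediate values can be stored directly in a flat array, with no per-entry allocation or pointer. The following Python sketch of a linear-probing integer set over `array("q")` illustrates that layout; it is a generic open-addressing table chosen for illustration, and `CompactIntSet` is a hypothetical name, not the design of CraigFe/compact.

```python
from array import array

EMPTY = -1  # sentinel for a free slot; this sketch stores only non-negative ints


class CompactIntSet:
    """Linear-probing hash set whose elements live unboxed in one flat array."""

    def __init__(self, capacity: int = 8) -> None:
        if capacity < 1 or capacity & (capacity - 1):
            raise ValueError("capacity must be a power of two")
        self.slots = array("q", [EMPTY]) * capacity  # 8 bytes per slot, no pointers
        self.size = 0

    def _probe(self, x: int) -> int:
        # Index of the slot holding x, or of the first free slot on its probe path.
        mask = len(self.slots) - 1
        i = hash(x) & mask
        while self.slots[i] != EMPTY and self.slots[i] != x:
            i = (i + 1) & mask
        return i

    def add(self, x: int) -> None:
        if x < 0:
            raise ValueError("negative values are reserved in this sketch")
        if 2 * (self.size + 1) > len(self.slots):  # keep the load factor <= 1/2
            self._grow()
        i = self._probe(x)
        if self.slots[i] == EMPTY:
            self.slots[i] = x
            self.size += 1

    def __contains__(self, x: int) -> bool:
        return x >= 0 and self.slots[self._probe(x)] == x

    def _grow(self) -> None:
        old = self.slots
        self.slots = array("q", [EMPTY]) * (2 * len(old))
        for x in old:
            if x != EMPTY:
                self.slots[self._probe(x)] = x


s = CompactIntSet()
for offset in (0, 42, 4096, 42):
    s.add(offset)
print(s.size, 42 in s, 7 in s, len(s.slots))  # 3 True False 8
```

Keeping the load factor at or below one half guarantees a free slot on every probe path, so lookups always terminate.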

Relevant links: tezos/tezos#4012, CraigFe/compact.

Verify Existing Bits of the Stack Using Gospel

We are continuing our work on a PPX for the Monolith test framework.

In Gospel, we worked on checking pattern-matching exhaustiveness and on cleaning up Gospel’s AST. In Ortac, we worked on deriving equality from types (polymorphic equality is not what we want when checking specifications) and on a better intermediate representation for types.
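One reason polymorphic equality falls short is that a value’s representation can differ from what it denotes: a set stored as a list makes `[1, 2]` and `[2, 1]` structurally different although they are the same set. The following Python sketch derives an equality function by recursion on a type descriptor; the descriptor encoding and `derive_eq` are hypothetical, and Ortac derives OCaml functions with its own representation.

```python
from typing import Any, Callable, Tuple

Eq = Callable[[Any, Any], bool]
# Hypothetical type descriptors: ("int",), ("list", t), ("set", t), ("pair", t1, t2)
Ty = Tuple


def derive_eq(ty: Ty) -> Eq:
    """Build an equality function by recursion on a type descriptor."""
    tag = ty[0]
    if tag == "int":
        return lambda a, b: a == b
    if tag == "list":
        elt = derive_eq(ty[1])
        return lambda a, b: len(a) == len(b) and all(elt(x, y) for x, y in zip(a, b))
    if tag == "set":
        elt = derive_eq(ty[1])

        def mem(x, ys):
            return any(elt(x, y) for y in ys)

        # Sets represented as lists: compare membership, ignoring order and duplicates.
        return lambda a, b: all(mem(x, b) for x in a) and all(mem(y, a) for y in b)
    if tag == "pair":
        left, right = derive_eq(ty[1]), derive_eq(ty[2])
        return lambda a, b: left(a[0], b[0]) and right(a[1], b[1])
    raise ValueError(f"unsupported type: {tag}")


set_eq = derive_eq(("set", ("int",)))
print([1, 2] == [2, 1], set_eq([1, 2], [2, 1]))  # False True
print(derive_eq(("pair", ("set", ("int",)), ("int",)))(([1, 1, 2], 3), ([2, 1], 3)))  # True
```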

Relevant links: n-osborne/ppx_monolith, ocaml-gospel/gospel#122

Future Focus

The release of Irmin 3.0 is our main focus for the beginning of this new year. The structured keys feature, the main change introduced by Irmin 3.0, uses considerably less I/O and improves the overall performance of the node, so we will focus on integrating it into Tezos.

We’ll also investigate how to improve the snapshot import/export and continue our work on automating the benchmarks.

We’re proud of the progress made in December and all of 2021, and we look forward to publishing public reports more frequently in 2022 for the benefit of the Tezos community.